Logarithm: A logging engine for AI training workflows and services

- Systems and application logs play a key role in operations, observability, and debugging workflows at Meta.

- Logarithm is a hosted, serverless, multitenant service, used only internally at Meta, that consumes and indexes these logs and provides an interactive query interface to retrieve and view logs.

- In this post, we present the design behind Logarithm, and show how it powers AI training debugging use cases.

Logarithm indexes 100+GB/s of logs in real time and serves thousands of queries a second. We designed the system to support service-level guarantees on log freshness, completeness, durability, query latency, and query result completeness. Users can emit logs using their choice of logging library (the common library at Meta is the Google Logging Library [glog]). Users can query using regular expressions on log lines, arbitrary metadata fields attached to logs, and across log files of hosts and services.

Logarithm is written in C++20 and the codebase follows modern C++ patterns, including coroutines and async execution. This has supported both performance and maintainability, and helped the team move fast – developing Logarithm in just three years.

Logarithm’s data model

Logarithm represents logs as a named log stream of (host-local) time-ordered sequences of immutable unstructured text, corresponding to a single log file. A process can emit multiple log streams (stdout, stderr, and custom log files). Each log line can have zero or more metadata key-value pairs attached to it. A common example of metadata is rank ID in machine learning (ML) training, when multiple sequences of log lines are multiplexed into a single log stream (e.g., in PyTorch).

Logarithm supports typed structures in two ways – via typed APIs (ints, floats, and strings), and extraction from a log line using regex-based parse-and-extract rules – a common example is metrics of tensors in ML model logging. The extracted key-value pairs are added to the log line’s metadata.

Figure 1: Logarithm data model. The boxes on text represent typed structures.
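The data model above can be sketched in a few lines of Python. This is an illustration only, not Logarithm's API: the `LogLine` class, the `extract` helper, and the `LOSS_RULE` pattern are hypothetical names chosen for the example. It shows an immutable text line with typed metadata, and a regex parse-and-extract rule whose captured values are added to that metadata.

```python
import re
from dataclasses import dataclass, field


@dataclass(frozen=True)
class LogLine:
    """One line of a log stream: immutable text plus typed key-value metadata."""
    timestamp: float                      # host-local time
    text: str                             # unstructured log text
    metadata: dict = field(default_factory=dict)


# Hypothetical user-defined extraction rule for a training-metrics line.
LOSS_RULE = re.compile(r"loss=(?P<loss>[0-9.]+) lr=(?P<lr>[0-9.eE+-]+)")


def extract(line: LogLine, rule: re.Pattern, types: dict) -> LogLine:
    """Return a new line whose metadata also holds the values captured by rule."""
    match = rule.search(line.text)
    if match is None:
        return line
    # Convert each captured string with the caller-supplied type (default: str).
    extracted = {k: types.get(k, str)(v) for k, v in match.groupdict().items()}
    return LogLine(line.timestamp, line.text, {**line.metadata, **extracted})
```

A line such as `step 10 loss=0.25 lr=1e-3` that already carries `{"rank": 3}` would come back with `loss` and `lr` added as floats, while the text itself is unchanged.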

AI training debugging with Logarithm

Before looking at Logarithm’s internals, we describe how it supports debugging of training systems and model issues, one of the prominent use cases of Logarithm at Meta. ML model training workflows tend to have a wide range of failure modes, spanning data inputs, model code and hyperparameters, and systems components (e.g., PyTorch, data readers, checkpointing, framework code, and hardware). Further, failure root causes evolve faster than in traditional service architectures due to rapidly-evolving workloads, from scale to architectures to sharding and optimizations. To triage failures that change this quickly, it is necessary to collect detailed telemetry on both the systems and the model.

Since training jobs run for extended periods of time, training systems and model telemetry and state need to be continuously captured in order to be able to debug a failure without reproducing the failure with additional logging (which may not be deterministic and wastes GPU resources).

Given the scale of training jobs, systems and model telemetry tend to be detailed and very high-throughput – logs are relatively cheap to write (e.g., compared to metrics, relational tables, and traces) and have the information content to power debugging use cases.

We stream, index and query high-throughput logs from systems and model layers using Logarithm.

Logarithm ingests both systems logs from the training stack, and model telemetry from training jobs that the stack executes. In our setup, each host runs multiple PyTorch ranks (processes), one per GPU, and the processes write their output streams to a single log file. Without rank information in log lines, debugging distributed job failures is ambiguous, and adding it by hand would mean modifying every logging site (including third-party code). With the Logarithm metadata API, process context such as rank ID is attached to every log line – the API adds it into thread-local context and attaches a glog handler.
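The same idea can be illustrated with Python's standard `logging` module. This is an analogy under stated assumptions, not the Logarithm metadata API or glog: `set_log_context`, `ContextFilter`, and the logger name are hypothetical. Context is stored once in thread-local state, and a filter attached to the handler stamps it onto every record, so no logging call site has to change.

```python
import io
import logging
import threading

_context = threading.local()  # per-thread process context (e.g. rank ID)


def set_log_context(**kv):
    """Merge key-value pairs into the current thread's logging context."""
    _context.kv = {**getattr(_context, "kv", {}), **kv}


class ContextFilter(logging.Filter):
    """Attach the thread-local context to every record that passes through."""

    def filter(self, record):
        record.metadata = dict(getattr(_context, "kv", {}))
        return True


def make_logger(stream):
    logger = logging.getLogger("trainer_demo")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.addFilter(ContextFilter())
    handler.setFormatter(
        logging.Formatter("%(levelname)s %(metadata)s %(message)s"))
    logger.handlers = [handler]
    return logger


buf = io.StringIO()
log = make_logger(buf)
set_log_context(rank=3)          # set once, at process start
log.info("starting iteration")   # call site is unchanged
```

After this runs, `buf` holds the line `INFO {'rank': 3} starting iteration`, even though the call site never mentions the rank.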

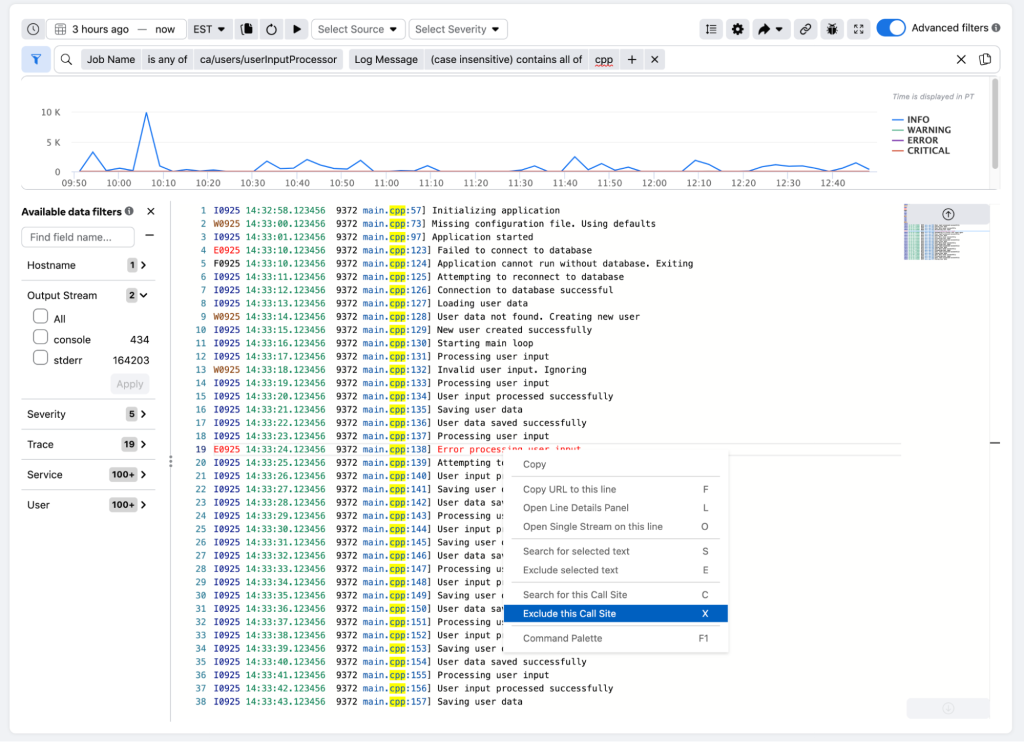

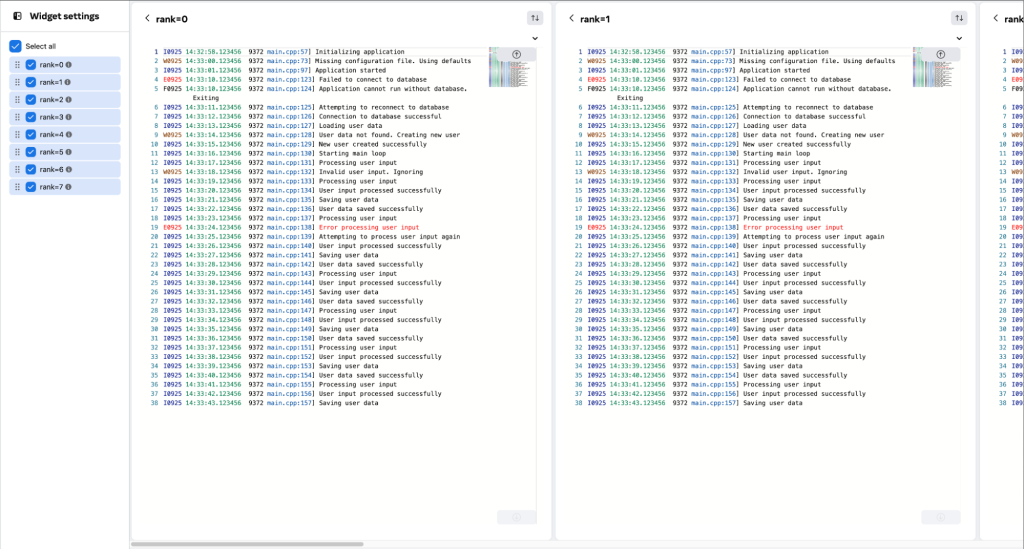

We added UI tools to enable common log-based interactive debugging primitives. The following figures show screenshots of two such features (on top of Logarithm’s filtering operations).

Filter-by-callsite enables hiding known log lines or verbose/noisy logging sites when walking through a log stream. Walking through multiple log streams side-by-side enables finding rank state that is different from other ranks (i.e., additional lines or missing lines), which typically is a symptom or root cause. This is directly a result of the single program, multiple data nature of production training jobs, where every rank iterates on data batches with the same code (with batch-level barriers).
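Both primitives are easy to express over parsed log lines. The sketch below is a simplified illustration, not the UI's implementation; it assumes each line is a dict with a `callsite` field, and the function names are hypothetical. Because every rank runs the same code, a callsite whose line count differs between ranks is a good place to start looking.

```python
from collections import Counter


def filter_by_callsite(lines, hidden):
    """Hide lines emitted from known or noisy logging sites."""
    return [line for line in lines if line["callsite"] not in hidden]


def divergent_callsites(lines_by_rank):
    """Return callsites whose line counts differ between ranks.

    lines_by_rank maps rank ID -> list of parsed lines for that rank.
    The result maps callsite -> {rank: count}, including ranks with 0.
    """
    counts = {
        rank: Counter(line["callsite"] for line in lines)
        for rank, lines in lines_by_rank.items()
    }
    all_sites = set().union(*counts.values())
    divergent = {}
    for site in all_sites:
        per_rank = {rank: c[site] for rank, c in counts.items()}
        if len(set(per_rank.values())) > 1:   # not identical on every rank
            divergent[site] = per_rank
    return divergent
```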

Figure 2: Logarithm UI features for training systems debugging (Logs shown are for demonstration purposes).

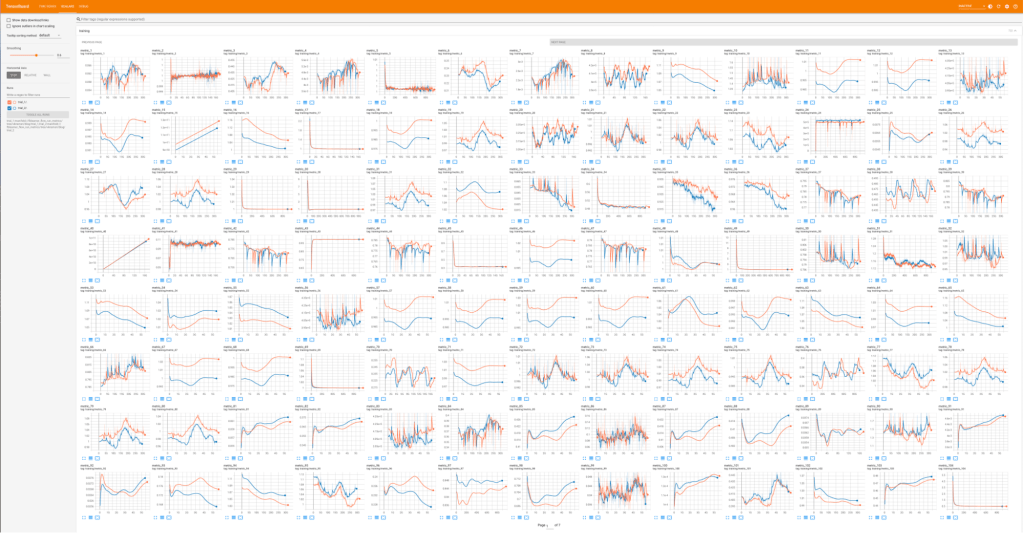

Logarithm ingests continuous model telemetry and summary statistics that span model input and output tensors, model properties (e.g., learning rate), model internal state tensors (e.g., neuron activations) and gradients during training. This powers live training model monitoring dashboards such as an internal deployment of TensorBoard, and is used by ML engineers to debug model convergence issues and training failures (due to gradient/loss explosions) using notebooks on raw telemetry.

Model telemetry tends to be iteration-based tensor timeseries with dimensions (e.g., model architecture, neuron, or module names), and tends to be high-volume and high-throughput (which makes low-cost ingestion in Logarithm a natural choice). Collocating systems and model telemetry enables debugging issues that cascade from one layer to the other. The model telemetry APIs internally write timeseries and dimensions as typed key-value pairs using the Logarithm metadata API. Multimodal data (e.g., images) are captured as references to files written to an external blob store.

Model telemetry dashboards are typically a large number of timeseries visualizations arranged in a grid – this enables ML engineers to eyeball spatial and temporal dynamics of the model external and internal state over time and find anomalies and correlation structure. A single dashboard hence needs to fetch a very large number of timeseries and their tensors. In order to render at interactive latencies, dashboards batch and fan out queries to Logarithm using the streaming API. The streaming API returns results with random ordering, which enables dashboards to incrementally render all plots in parallel – within hundreds of milliseconds to the first set of samples and within seconds to the full set of points.
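A rough sketch of this fan-out pattern using `asyncio` follows. The `stream_series` generator is a hypothetical stand-in for a streaming query (it is not the Logarithm streaming API): it yields small chunks of samples in random order. The dashboard side consumes chunks from all series as they arrive, so every plot fills in incrementally instead of waiting for any one series to finish.

```python
import asyncio
import random


async def stream_series(name, n_points, seed):
    """Hypothetical streaming query: yields (name, chunk) in random sample order."""
    rng = random.Random(seed)
    steps = list(range(n_points))
    rng.shuffle(steps)
    for i in range(0, n_points, 4):
        await asyncio.sleep(0)                      # simulate a network hop
        yield name, [(s, float(s)) for s in steps[i:i + 4]]


async def render_dashboard(names, n_points):
    """Fan out one stream per series and merge chunks as they arrive."""
    plots = {name: [] for name in names}
    queue = asyncio.Queue()

    async def pump(name, seed):
        async for item in stream_series(name, n_points, seed):
            await queue.put(item)
        await queue.put((name, None))               # end-of-stream marker

    tasks = [asyncio.create_task(pump(n, i)) for i, n in enumerate(names)]
    remaining = len(names)
    while remaining:
        name, chunk = await queue.get()
        if chunk is None:
            remaining -= 1
        else:
            plots[name].extend(chunk)               # a real UI would redraw here
    await asyncio.gather(*tasks)
    return {name: sorted(points) for name, points in plots.items()}
```

Random ordering means an early partial plot already covers the whole iteration range at low resolution, which is why a first render can be useful long before the full set of points arrives.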

Figure 3: TensorBoard model telemetry dashboard powered by Logarithm. Renders 722 metric time series at once (total of 450k samples).

Logarithm’s system architecture

Our goal behind Logarithm is to build a highly scalable and fault tolerant system that supports high-throughput ingestion and interactive query latencies, and that provides strong guarantees on availability, durability, freshness, completeness, and query latency.

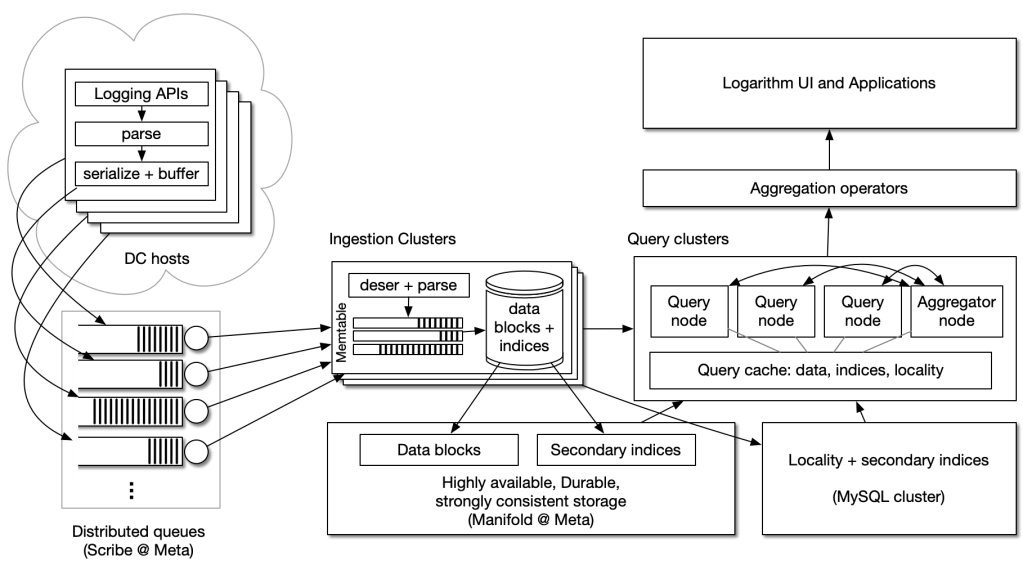

Figure 4: Logarithm’s system architecture.

At a high level, Logarithm comprises the following components:

- Application processes emit logs using logging APIs. The APIs support emitting unstructured log lines along with typed metadata key-value pairs (per-line).

- A host-side agent discovers the format of lines and parses lines for common fields, such as timestamp, severity, process ID, and callsite.

- The resulting object is buffered and written to a distributed queue (for that log stream) that provides durability guarantees with days of object lifetime.

- Ingestion clusters read objects from queues, and support additional parsing based on any user-defined regex extraction rules – the extracted key-value pairs are written to the line’s metadata.

- Query clusters support interactive and bulk queries on one or more log streams with predicate filters on log text and metadata.
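The host-side parsing step in the list above can be sketched as follows. This is a minimal illustration, not the agent's implementation: the regex assumes the classic glog prefix layout (`Lmmdd hh:mm:ss.uuuuuu threadid file:line] msg`), and `parse_line` and the record layout are hypothetical. Note that the numeric field in this glog layout is a thread ID. Lines that do not match the format are passed through as raw text.

```python
import re

# Assumed glog-style prefix: severity letter, mmdd, time, thread id, file:line.
GLOG_PREFIX = re.compile(
    r"^(?P<severity>[IWEF])(?P<mmdd>\d{4}) "
    r"(?P<time>\d{2}:\d{2}:\d{2}\.\d{6})\s+(?P<thread_id>\d+) "
    r"(?P<callsite>[^\s:]+:\d+)\] (?P<message>.*)$"
)


def parse_line(raw, user_metadata=None):
    """Parse common fields out of one raw line; keep unknown formats as text."""
    record = {"text": raw, "metadata": dict(user_metadata or {})}
    match = GLOG_PREFIX.match(raw)
    if match:
        record.update(
            severity=match["severity"],
            mmdd=match["mmdd"],
            time=match["time"],
            thread_id=int(match["thread_id"]),
            callsite=match["callsite"],
            message=match["message"],
        )
    return record
```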

Logarithm stores locality of data blocks in a central locality service. We implement this on a hosted, highly partitioned and replicated collection of MySQL instances. Every block that is generated at ingestion clusters is written as a set of locality rows (one for each log stream in the block) to a deterministic shard, and reads are distributed across replicas for a shard. For scalability, we do not use distributed transactions since the workload is append-only. Note that since the ingestion processing across log streams is not coordinated by design (for scalability), federated queries across log streams may not return the same last-logged timestamps between log streams.
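As an illustration of the locality scheme, the sketch below models shards and replicas as in-memory lists. It is a toy under stated assumptions, not the MySQL-backed service: `shard_for`, `LocalityService`, and the hash choice are hypothetical. Each block is written as one row per log stream to the shard determined by hashing the stream name, and a read picks any replica of that shard.

```python
import hashlib
import random


def shard_for(log_stream, num_shards):
    """Deterministically map a log stream name to a shard index."""
    digest = hashlib.sha256(log_stream.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


class LocalityService:
    def __init__(self, num_shards, replicas_per_shard):
        # shards[s][r] is the list of (stream, block_id) rows on replica r.
        self.shards = [[[] for _ in range(replicas_per_shard)]
                       for _ in range(num_shards)]

    def publish_block(self, block_id, streams):
        """Append one locality row per log stream contained in the block."""
        for stream in streams:
            shard = self.shards[shard_for(stream, len(self.shards))]
            for replica in shard:          # stand-in for replication
                replica.append((stream, block_id))

    def blocks_for(self, stream):
        """Read from a randomly chosen replica of the stream's shard."""
        shard = self.shards[shard_for(stream, len(self.shards))]
        replica = random.choice(shard)
        return [block for s, block in replica if s == stream]
```

Because rows for one stream always land on one shard and are only ever appended, no write needs to span shards, which is consistent with the choice to avoid distributed transactions.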

Our design choices center around layering storage, query, and log analytics and simplicity in state distribution. We design for two common properties of logs: they are written more than queried, and recent logs tend to be queried more than older ones.

Design decisions

Logarithm stores logs as blocks of text and metadata and maintains secondary indices to support low latency lookups on text and/or metadata. Since logs rapidly lose query likelihood with time, Logarithm tiers the storage of logs and secondary indices across physical memory, local SSD, and a remote durable and highly available blob storage service (at Meta we use Manifold). In addition to secondary indices, tiering also ensures the lowest latencies for the most accessed (recent) logs.

Lightweight disaggregated secondary indices. Maintaining secondary indices on disaggregated blob storage magnifies data lookup costs at query time. Logarithm’s secondary indices are designed to be lightweight, using Bloom filters. The Bloom filters are prefetched (or loaded on-query) into a distributed cache on the query clusters when blocks are published on disaggregated storage, to hide network latencies on index lookups. We later added support for caching data blocks in the query cache when executing a query. The system tries to collocate data from the same log stream in order to reduce fan outs and stragglers during query processing. The logs and metadata are implemented as ORC files. The Bloom filters currently index log stream locality and metadata key-value information (i.e., min-max values and Bloom filters for each column of ORC stripes).
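To make the pruning idea concrete, here is a minimal Bloom filter plus a min-max range kept per block. It is a sketch, not Logarithm's index format or ORC's: the class, the sizes, and the `key=value` encoding are assumptions made for the example. A block is read only if its time range overlaps the query and its filter might contain the requested key-value pair. A Bloom filter can return false positives but never false negatives, so no matching block is skipped.

```python
import hashlib


class BloomFilter:
    def __init__(self, num_bits=1024, num_hashes=4):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = 0                       # bit array stored in one int

    def _positions(self, item):
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits |= 1 << pos

    def might_contain(self, item):
        return all((self.bits >> pos) & 1 for pos in self._positions(item))


def build_index(block_id, lines):
    """Summarize a block: Bloom filter over metadata pairs plus time min-max."""
    bloom = BloomFilter()
    for line in lines:
        for key, value in line["metadata"].items():
            bloom.add(f"{key}={value}")
    timestamps = [line["ts"] for line in lines]
    return {"block": block_id, "bloom": bloom,
            "min_ts": min(timestamps), "max_ts": max(timestamps)}


def candidate_blocks(indices, key, value, start_ts, end_ts):
    """Blocks that overlap the time range and might contain key=value."""
    return [ix["block"] for ix in indices
            if ix["max_ts"] >= start_ts and ix["min_ts"] <= end_ts
            and ix["bloom"].might_contain(f"{key}={value}")]
```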

Logarithm separates compute (ingestion and query) and storage to rapidly scale out the volume of log blocks and secondary indices. The exception to this is the in-memory memtable on ingestion clusters that buffers time-ordered lists of log streams, which is a staging area for both writes and reads. The memtable is a bounded per-log stream buffer of the most recent and long enough time window of logs that are expected to be queried. The ingestion implementation is designed to be I/O-bound and not compute or host memory bandwidth-heavy to handle close to GB/s of per-host ingestion streaming. To minimize memtable contention, we implement multiple memtables: active ones for staging, and an immutable prior version for serializing to disk. The ingestion implementation follows zero-copy semantics.
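The staging-plus-immutable-snapshot arrangement can be sketched as below. This is a simplified single-threaded illustration, not the C++ implementation: the `Memtable` class is hypothetical, and it bounds the buffer by line count, whereas the text describes a bound based on a time window. Writes go to the active buffer; rotating swaps in a fresh buffer and leaves the previous one frozen for serialization, while reads continue to see both.

```python
class Memtable:
    """Per-log-stream staging buffer with one frozen snapshot for flushing."""

    def __init__(self, max_lines):
        self.max_lines = max_lines
        self.active = []      # receives new writes
        self.frozen = ()      # immutable prior version being written to disk

    def append(self, ts, text):
        """Stage a line; returns True when the caller should rotate."""
        self.active.append((ts, text))
        return len(self.active) >= self.max_lines

    def rotate(self):
        """Freeze the active buffer for serialization and start a new one."""
        self.frozen = tuple(self.active)
        self.active = []
        return self.frozen

    def read(self, since_ts):
        """Serve recent lines from both the frozen and the active buffers."""
        return [entry for entry in (*self.frozen, *self.active)
                if entry[0] >= since_ts]
```

Since the frozen snapshot never changes, the serializer can read it without coordinating with writers, which is the point of keeping it separate from the active buffer.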

Similarly, Logarithm separates ingestion and query resources to ensure bulk processing (ingestion) and interactive workloads do not impact each other. Note that Logarithm’s design uses schema-on-write, but the data model and parsing computation is distributed between the logging hosts (which scales ingestion compute), and optionally, the ingestion clusters (for user-defined parsing). Customers can add additional anticipated capacity for storage (e.g., increased retention limits), ingestion and query workloads.

Logarithm pushes down distributed state maintenance to disaggregated storage layers (instead of replicating compute at ingestion layer). The disaggregated storage in Manifold uses read-write quorums to provide strong consistency, durability and availability guarantees. The distributed queues in Scribe use LogDevice for maintaining objects as a durable replicated log. This simplifies ingestion and query tier fault tolerance. Ingestion nodes stream serialized objects on local SSDs to Manifold in 20-min. epochs, and checkpoint Scribe offsets on Manifold. When a failed ingestion node is replaced, the new node downloads the last epoch of data from Manifold, and restarts ingesting raw logs from the last Scribe checkpoint.
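The recovery path described above can be modeled in a few lines. Everything here is a stand-in: a Python list plays the durable queue, `BlobStore` plays the blob store, epochs are counted in records rather than minutes, and committing a block together with its offset is assumed to be atomic. Under those assumptions, a replacement node that resumes from the last checkpoint neither loses nor duplicates lines, because anything buffered but not yet committed is still in the queue.

```python
class BlobStore:
    """Stand-in for durable storage holding blocks and the queue checkpoint."""

    def __init__(self):
        self.blocks = []
        self.checkpoint = 0

    def commit_epoch(self, block, next_offset):
        # Assumed atomic: the block and its offset become visible together.
        self.blocks.append(list(block))
        self.checkpoint = next_offset


class IngestionNode:
    def __init__(self, queue, store, epoch_size):
        self.queue, self.store, self.epoch_size = queue, store, epoch_size
        self.offset = store.checkpoint            # resume point in the queue
        # Reload the last committed epoch so recent logs can be served again.
        self.recent = list(store.blocks[-1]) if store.blocks else []
        self.buffer = []

    def ingest(self, max_records):
        """Read up to max_records from the queue, committing full epochs."""
        for _ in range(max_records):
            if self.offset >= len(self.queue):
                break
            self.buffer.append(self.queue[self.offset])
            self.offset += 1
            if len(self.buffer) == self.epoch_size:
                self.flush()

    def flush(self):
        """Commit whatever is buffered as one epoch."""
        if self.buffer:
            self.store.commit_epoch(self.buffer, self.offset)
            self.buffer = []
```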

Ingestion elasticity. The Logarithm control plane (based on Shard Manager) tracks ingestion node health and log stream shard-level hotspots, and relocates shards to other nodes when it finds issues or load. When there is an increase in logs written in a log stream, the control plane scales out the shard count and allocates new shards on ingestion nodes with available resources. The system is designed to provide resource isolation at ingestion-time between log streams. If there is a significant surge in very short timescales, the distributed queues in Scribe absorb the spikes, but when the queues are full, the log stream can lose logs (until elasticity mechanisms increase shard counts). Such spikes typically tend to result from logging bugs (e.g., verbosity) in application code.

Query processing. Queries are routed randomly across the query clusters. When a query node receives a request, it assumes the role of an aggregator and partitions the request across a bounded subset of query cluster nodes (balancing between cluster load and query latency). The aggregator pushes down filter and sort operators to query nodes and returns sorted results (an end-to-end blocking operation). The query nodes read their partitions of logs by looking up locality, followed by secondary indices and data blocks – the read can span the query cache, ingestion nodes (for most recent logs) and disaggregated storage. We added 2x replication of the query cache to support query cluster load distribution and fast failover (without waiting for cache shard movement). Logarithm also provides a streaming query API with randomized and incremental sampling that returns filtered logs (an end-to-end non-blocking operation) for lower-latency reads and time-to-first-log. Logarithm paginates result sets.
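The blocking query path can be sketched as a scatter-gather with pushdown. This is an illustration with hypothetical function names and an in-memory data layout, not the production query engine: each query node filters and sorts its own partition, and the aggregator only merges already-sorted partial results and cuts a page.

```python
import heapq
import itertools
import re


def query_node(partition, pattern, metadata_filter):
    """Pushed-down work: filter on text and metadata, then sort by time."""
    regex = re.compile(pattern)
    hits = [
        line for line in partition
        if regex.search(line["text"])
        and all(line["metadata"].get(k) == v for k, v in metadata_filter.items())
    ]
    return sorted(hits, key=lambda line: line["ts"])


def aggregate(partitions, pattern, metadata_filter, page_size):
    """Aggregator: fan out to query nodes, merge sorted results, return a page."""
    partials = [query_node(p, pattern, metadata_filter) for p in partitions]
    merged = heapq.merge(*partials, key=lambda line: line["ts"])
    return list(itertools.islice(merged, page_size))
```

The merge is what makes this path blocking end to end: the first result line cannot be returned until every partition has produced its sorted output, which is the cost the streaming API avoids by giving up ordering.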

Logarithm can trade off query result completeness or ordering to maintain query latency (and flag to the client when it does so). For example, this can be the case when a partition of a query is slow or when the number of blocks to be read is too high. In the former case, it times out and skips the straggler. In the latter case, it starts from skipped blocks (or offsets) when processing the next result page. In practice, we provide guarantees for both result completeness and query latency. This is primarily feasible since the system has mechanisms to reduce the likelihood of root causes that lead to stragglers. Logarithm also does query admission control at client or user-level.
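The straggler case can be illustrated with a deadline over per-partition tasks. This is a sketch of the general technique using `asyncio`, not Logarithm's implementation; the function names, the result fields, and the `fast` and `slow` partitions are hypothetical. Partitions that miss the deadline are cancelled, and the response tells the client that the result is incomplete and which partitions were skipped.

```python
import asyncio


async def query_with_deadline(partitions, deadline_s):
    """Run per-partition coroutines; skip and report any that miss the deadline.

    partitions maps a partition name to a coroutine returning a list of lines
    and must not be empty.
    """
    tasks = {asyncio.create_task(coro): name
             for name, coro in partitions.items()}
    done, pending = await asyncio.wait(tasks.keys(), timeout=deadline_s)
    for task in pending:                  # stragglers: stop waiting for them
        task.cancel()
    await asyncio.gather(*pending, return_exceptions=True)
    lines = sorted(line for task in done for line in task.result())
    skipped = sorted(tasks[task] for task in pending)
    return {"lines": lines, "complete": not skipped, "skipped": skipped}


async def fast(lines):
    """Demo partition that answers immediately."""
    return lines


async def slow():
    """Demo straggler that would take far longer than any deadline."""
    await asyncio.sleep(30)
    return ["late"]
```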

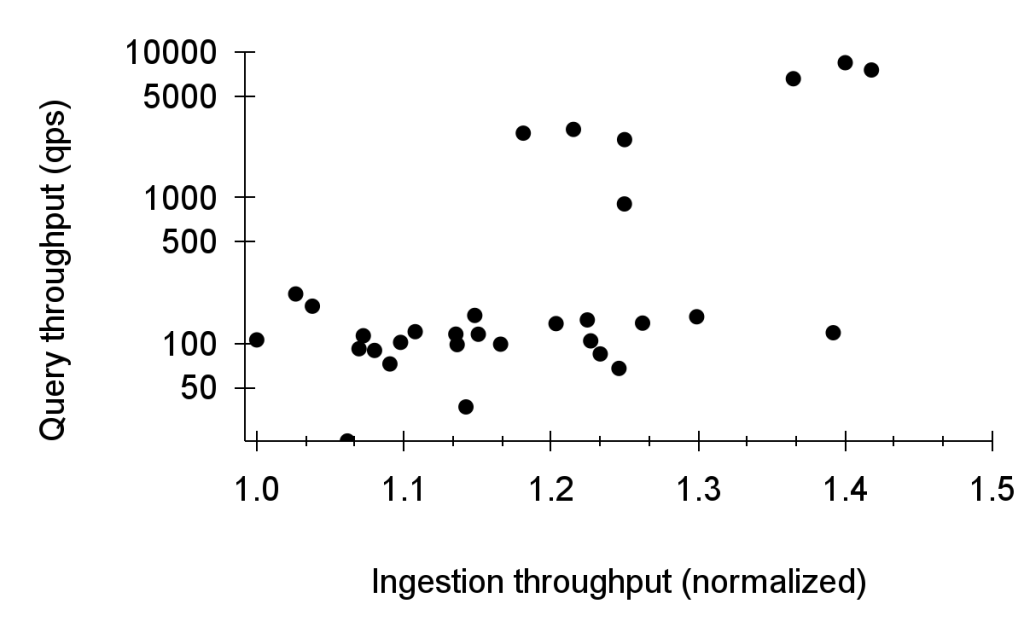

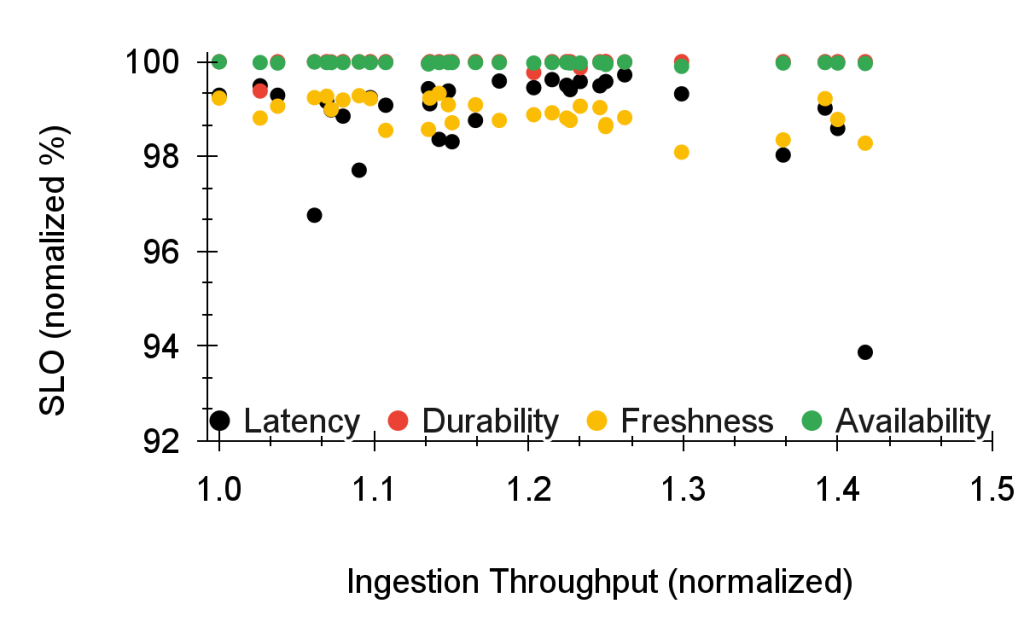

The following figures characterize Logarithm’s aggregate production performance and scalability across all log streams. They highlight scalability as a result of design choices that make the system simpler (spanning disaggregation, ingestion-query separation, indexes, and fault tolerance design). We present our production service-level objectives (SLOs) over a month, measured as the fraction of time the service violates thresholds on availability, durability (including completeness), freshness, and query latency.
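One simple reading of "fraction of time in violation" is the share of evenly spaced measurements that exceed a threshold. The helper below is an assumption about how such a number could be computed, not the definition used for the figures; the function name and the sample values are made up for illustration.

```python
def violation_fraction(samples, threshold):
    """Fraction of evenly spaced samples that exceed the threshold."""
    if not samples:
        return 0.0
    return sum(1 for value in samples if value > threshold) / len(samples)


# Made-up freshness lags in seconds, one per measurement interval.
daily_fraction = violation_fraction([1.0, 2.0, 9.0, 1.5], threshold=5.0)
```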

Figure 5: Logarithm’s ingestion-query scalability for the month of January 2024 (one point per day).

Figure 6: Logarithm SLOs for the month of January 2024 (one point per day).

Logarithm supports strong security and privacy guarantees. Access control can be enforced on a per-log line granularity at ingestion and query-time. Log streams can have configurable retention windows with line-level deletion operations.

Next steps

Over the last few years, several use cases have been built over the foundational log primitives that Logarithm implements. Systems such as relational algebra on structured data and log analytics are being layered on top with Logarithm’s query latency guarantees – using pushdowns of search-filter-sort and federated retrieval operations. Logarithm supports a native UI for interactive log exploration, search, and filtering to aid debugging use cases. This UI is embedded as a widget in service consoles across Meta services. Logarithm also supports a CLI for bulk download of service logs for scripting analyses.

The Logarithm design has centered around simplicity for scalability guarantees. We are continuously building domain-specific and domain-agnostic log analytics capabilities within or layered on Logarithm with appropriate pushdowns for performance optimizations. We continue to invest in storage and query-time improvements, such as lightweight disaggregated inverted indices for text search, storage layouts optimized for queries, and distributed debugging UI primitives for AI systems.

Acknowledgements

We thank the Logarithm team’s current and past members, particularly our leads: Amir Alon, Stavros Harizopoulos, Rukmani Ravisundaram, and Laurynas Sukys, and our leadership: Vinay Perneti, Shah Rahman, Nikhilesh Reddy, Gautam Shanbhag, Girish Vaitheeswaran, and Yogesh Upadhay. Thank you to our partners and customers: Sergey Anpilov, Jenya (Eugene) Lee, Aravind Ram, Vikram Srivastava, and Mik Vyatskov.
