ELI5: Captum – Easily Interpret AI Models

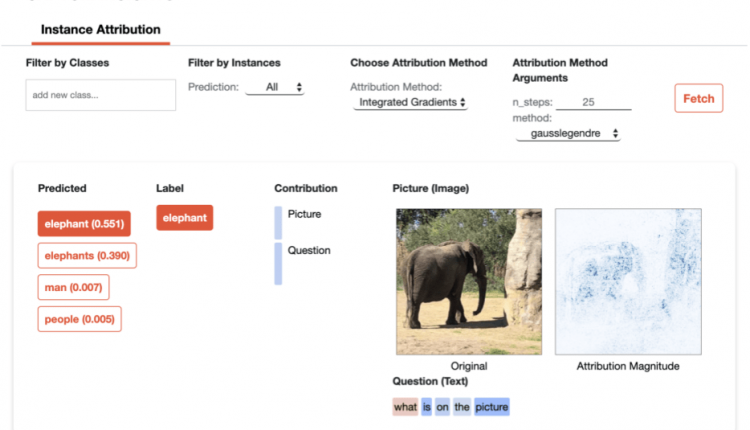

Since models can be difficult to interpret without proper visualization, Captum comes with a web interface called Captum Insights that works across images, text, and other features to help users easily visualize and understand feature attribution.
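To make concrete what an attribution tool such as Captum computes, here is a minimal plain-Python sketch of Integrated Gradients, one of the attribution algorithms Captum provides. It does not use Captum's API or mirror its implementation: `integrated_gradients`, `toy_model`, the step count, and the finite-difference gradient are all assumptions made for illustration. The idea is to score each input feature by how much it moves the model output along a straight path from a baseline input to the real input.

```python
from typing import Callable, List


def integrated_gradients(
    f: Callable[[List[float]], float],
    x: List[float],
    baseline: List[float],
    steps: int = 50,
    eps: float = 1e-6,
) -> List[float]:
    """Approximate Integrated Gradients attributions of f at x.

    Gradients are estimated with central finite differences, and the
    path integral from baseline to x is approximated with a midpoint
    Riemann sum. (Illustrative only; real libraries use autograd.)
    """
    n = len(x)
    grad_sums = [0.0] * n
    for k in range(steps):
        alpha = (k + 0.5) / steps  # midpoint of the k-th path segment
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for i in range(n):
            up = point.copy()
            down = point.copy()
            up[i] += eps
            down[i] -= eps
            grad_sums[i] += (f(up) - f(down)) / (2 * eps)
    # Scale the average gradient by each feature's distance from baseline.
    return [(xi - b) * g / steps for xi, b, g in zip(x, baseline, grad_sums)]


def toy_model(features: List[float]) -> float:
    """Hypothetical stand-in for a trained model with three input features."""
    return 2.0 * features[0] - 1.0 * features[1] + 0.5 * features[0] * features[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(toy_model, x, baseline)

for index, score in enumerate(attributions):
    print(f"feature {index}: attribution {score:+.3f}")
```

A useful sanity check is that the attributions add up (approximately) to the difference between the model output at the input and at the baseline. A positive score means the feature pushed the output up relative to the baseline, and a negative score means it pushed the output down.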

We recommend Captum for model developers who want to improve their work, interpretability researchers who focus on multimodal models, and application engineers who use trained models in production.

Where is Captum used?

Captum was made available as open source during the PyTorch Developer Conference in mid-October 2019.

Within Facebook, we use Captum to better understand how our AI models interpret profiles and other Facebook pages, which often contain a variety of text, audio, images, videos and linked content.

Where can I find out more?

To learn more about Captum, visit the website, which has documentation, tutorials, and API references. Captum’s GitHub repository has step-by-step instructions for installing and getting started.

If you have any further questions about Captum, please let us know on our YouTube channel or on Twitter. We always want to hear from you, and we hope you find this open source project and the new ELI5 series useful.

About the ELI5 series

In a series of short videos (approx. 1 minute long), one of our developer advocates on the Facebook Open Source team explains a Facebook open source project in a way that is easy to understand and use.

For each of these videos, which you can find on our YouTube channel, we will write an accompanying blog post (like the one you are reading now).

To learn more about Facebook Open Source, visit our open source site, subscribe to our YouTube channel, or follow us on Twitter and Facebook.

Interested in working with open source at Facebook? Check out our open source-related job postings on our careers page by taking this short survey.
